· Shane Trimbur

When GPT Spills the Tea: A DSPM Roadmap for Securing AI Agents

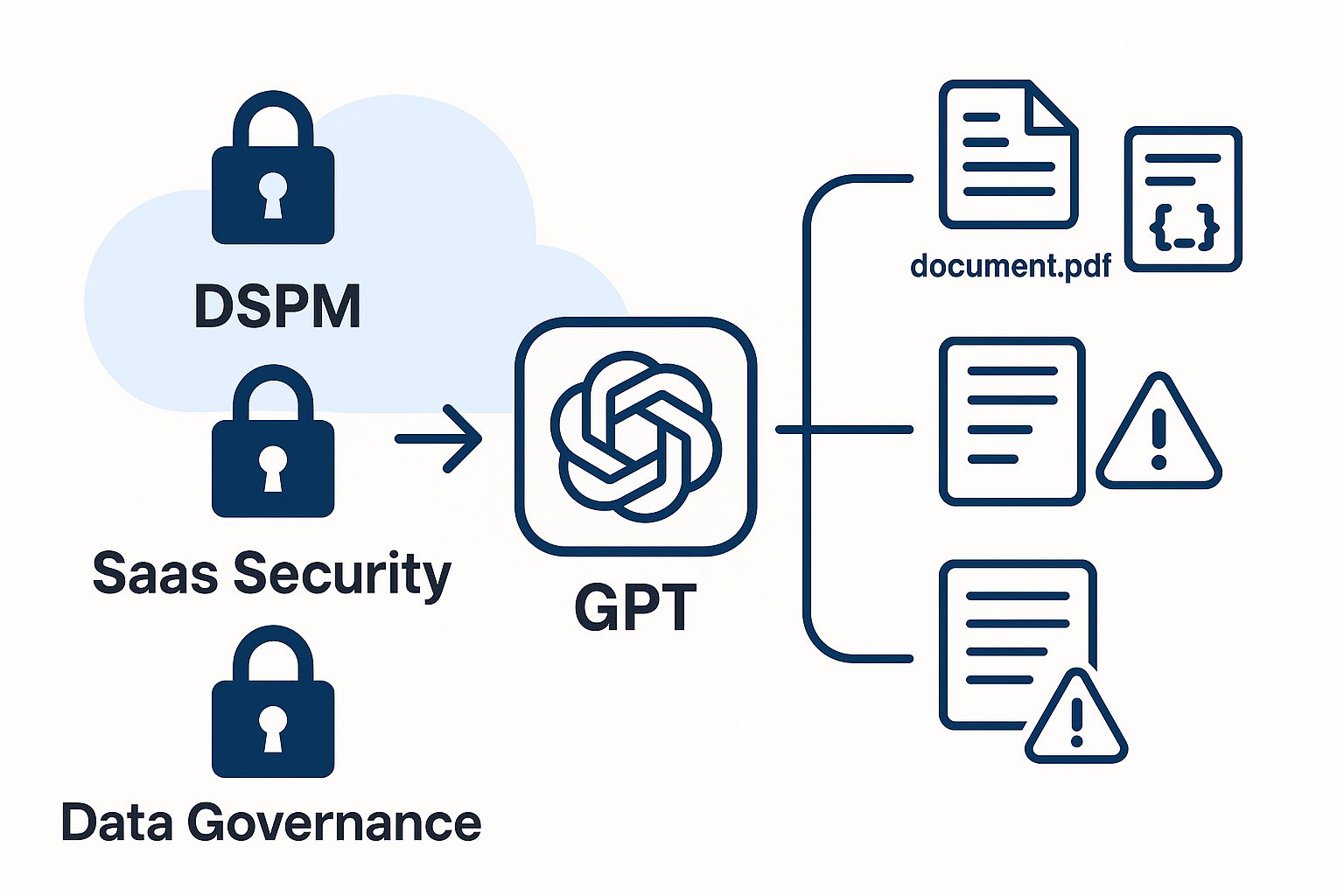

A new study reveals how GPTs can leak sensitive file data through five distinct vectors. Here's how experts in DSPM, SaaS security, and data governance can apply their skills to mitigate real-world LLM risks.

When GPT Spills the Tea: A Deep Dive into Knowledge File Leakage in Custom GPTs

Understanding the security implications when AI assistants reveal more than they should

The Hidden Vulnerability in Plain Sight

Custom GPTs have revolutionized how we interact with AI, allowing developers to create specialized assistants loaded with proprietary knowledge files. But what happens when these AI systems inadvertently reveal the very information they were designed to protect? A groundbreaking research paper, “When GPT Spills the Tea: Comprehensive Assessment of Knowledge File Leakage in GPTs”, has systematically uncovered a concerning phenomenon: knowledge file leakage in GPTs, where sensitive training data can be extracted through carefully crafted prompts.

This isn’t just a theoretical concern. The research demonstrates that organizations worldwide may be inadvertently exposing confidential documents, internal processes, and proprietary information to anyone who knows how to ask the right questions. The study provides comprehensive empirical evidence of these vulnerabilities and their real-world implications.

What Are Knowledge Files and Why Do They Matter?

Knowledge files are the backbone of custom GPTs. These documents contain:

- Proprietary business intelligence - Internal reports, strategies, and competitive analyses

- Technical documentation - API specifications, system architectures, and troubleshooting guides

- Training materials - Employee handbooks, process documentation, and best practices

- Customer data - Support tickets, user feedback, and interaction histories

- Legal documents - Contracts, compliance requirements, and policy documents

When organizations upload these files to create specialized GPTs, they expect the AI to reference this information contextually while keeping the raw data secure. The reality, however, is more complex.

The Anatomy of a Knowledge File Leak

As documented in the comprehensive research study, knowledge file leakage occurs through several attack vectors:

Direct Extraction Attacks

Attackers use prompts designed to bypass safety measures and extract raw file contents. Common techniques include:

- Prompt injection - Crafting inputs that override system instructions

- Role-playing scenarios - Convincing the GPT to act as a different entity with different rules

- Incremental extraction - Gradually pulling information through seemingly innocent questions

- Format manipulation - Requesting data in specific formats that bypass filters

Indirect Information Disclosure

Even when direct extraction fails, attackers can still gather sensitive information through:

- Inference attacks - Deducing protected information from AI responses

- Contextual leakage - Extracting metadata about file structures and contents

- Behavioral analysis - Understanding system capabilities and limitations through interaction patterns

Real-World Impact: When Theory Meets Practice

The consequences of knowledge file leakage extend far beyond academic curiosity. The research paper demonstrates through empirical testing that these vulnerabilities have tangible real-world implications. Consider these scenarios:

Corporate Espionage: A competitor creates prompts that extract a company’s strategic planning documents from their customer service GPT, gaining insights into future product launches and market strategies.

Compliance Violations: Healthcare organizations face HIPAA violations when their GPTs inadvertently reveal patient information embedded in training documents.

Intellectual Property Theft: Software companies lose competitive advantages when their GPTs leak proprietary algorithms, code snippets, or development methodologies.

Personal Privacy Breaches: Individual users discover their private conversations and data have been incorporated into knowledge files and can be accessed by other users.

The Technical Challenge: Why Traditional Security Falls Short

Standard cybersecurity approaches struggle with GPT knowledge file leakage because:

The Black Box Problem

Unlike traditional databases with clear access controls, GPTs operate as black boxes. It’s difficult to audit what information they might reveal or under what circumstances.

Dynamic Context Windows

GPTs can reference multiple knowledge files simultaneously, creating unexpected combinations and correlations that weren’t anticipated during deployment.

Natural Language Complexity

Traditional security tools excel at detecting structured attacks but struggle with the nuanced, conversational nature of prompt-based exploits.

Emergent Behaviors

As GPTs become more sophisticated, they develop unexpected capabilities that can be exploited in ways their creators never intended.

Detection and Assessment Strategies

Organizations need comprehensive approaches to identify knowledge file leakage:

Automated Testing Frameworks

- Prompt fuzzing - Systematically testing various prompt patterns to identify vulnerabilities

- Behavioral monitoring - Tracking GPT responses for unexpected information disclosure

- Content analysis - Scanning outputs for sensitive data patterns and keywords

Manual Security Audits

- Red team exercises - Simulating attacker behaviors to identify potential leakage points

- Penetration testing - Attempting to extract information through various attack vectors

- Compliance reviews - Ensuring GPT deployments meet regulatory requirements

Continuous Monitoring

- Real-time analysis - Monitoring live interactions for potential security incidents

- Anomaly detection - Identifying unusual patterns in GPT behavior or user interactions

- Incident response - Rapidly addressing discovered vulnerabilities or breaches

Mitigation Strategies: Building Secure Custom GPTs

Data Sanitization and Preparation

Before uploading knowledge files, organizations should:

- Remove sensitive identifiers - Strip personal information, account numbers, and proprietary codes

- Implement data classification - Categorize information by sensitivity level and access requirements

- Create filtered datasets - Prepare sanitized versions of documents for GPT consumption

- Establish retention policies - Define how long information should remain accessible

System Architecture Security

- Access controls - Implement robust authentication and authorization mechanisms

- Isolation techniques - Separate different types of knowledge files and user groups

- Audit logging - Track all interactions and information access patterns

- Fail-safe mechanisms - Ensure systems default to secure states when errors occur

Prompt Engineering Defenses

- Safety instructions - Embed clear guidelines about information sharing in system prompts

- Response filtering - Implement post-processing to catch potential leakage before user delivery

- Context limiting - Restrict the scope of information available for any single interaction

- Adversarial testing - Regularly test systems against known attack patterns

The Regulatory Landscape: Compliance in the Age of AI

As knowledge file leakage becomes more recognized, regulatory bodies are beginning to address AI-specific security requirements:

Existing Frameworks

- GDPR implications - How personal data protection laws apply to AI training data

- HIPAA considerations - Healthcare-specific requirements for AI systems handling patient information

- SOX compliance - Financial reporting implications of AI-driven business processes

- Industry standards - Sector-specific guidelines for AI security and data protection

Emerging Regulations

- AI governance frameworks - New laws specifically addressing AI system security and accountability

- Data residency requirements - Restrictions on where AI training data can be stored and processed

- Algorithmic transparency - Mandates for explainable AI systems and audit trails

- Incident disclosure - Requirements for reporting AI security breaches and vulnerabilities

Looking Forward: The Future of GPT Security

The landscape of GPT security is rapidly evolving. Several trends are shaping the future:

Technical Innovations

- Advanced encryption - Protecting knowledge files even when processed by AI systems

- Differential privacy - Mathematical guarantees about information disclosure limits

- Federated learning - Training AI systems without centralizing sensitive data

- Homomorphic encryption - Enabling computation on encrypted data without decryption

Industry Standards

- Security frameworks - Standardized approaches to AI system security assessment

- Certification programs - Third-party validation of AI security implementations

- Best practice guidelines - Industry-wide recommendations for secure AI deployment

- Incident sharing - Collaborative approaches to identifying and addressing new threats

Organizational Adaptations

- AI security teams - Specialized personnel focused on AI-specific security challenges

- Risk assessment frameworks - Structured approaches to evaluating AI security risks

- Governance structures - Organizational processes for managing AI security and compliance

- Training programs - Educating staff about AI security risks and mitigation strategies

Practical Steps for Organizations

Immediate Actions

- Audit existing GPTs - Identify all deployed systems and their knowledge files

- Implement monitoring - Set up basic logging and alerting for unusual activities

- Review permissions - Ensure appropriate access controls are in place

- Create incident response plans - Prepare for potential security breaches

Medium-term Initiatives

- Develop security policies - Create comprehensive guidelines for AI system deployment

- Implement testing frameworks - Establish regular security assessments

- Train personnel - Educate teams about AI security risks and best practices

- Enhance monitoring - Deploy sophisticated detection and analysis capabilities

Long-term Strategy

- Invest in research - Stay current with emerging threats and mitigation techniques

- Build partnerships - Collaborate with security vendors and research institutions

- Influence standards - Participate in industry efforts to establish security frameworks

- Continuous improvement - Regularly update security measures based on new discoveries

Conclusion: Balancing Innovation with Security

The phenomenon of GPT knowledge file leakage represents a new frontier in cybersecurity. As organizations increasingly rely on AI systems to process and deliver information, the potential for inadvertent disclosure grows. The challenge isn’t to abandon these powerful tools, but to understand their risks and implement appropriate safeguards.

Success requires a multi-faceted approach combining technical solutions, organizational processes, and regulatory compliance. Organizations that proactively address these challenges will be better positioned to harness the benefits of AI while protecting their sensitive information.

The era of AI-powered business operations is here to stay. The question isn’t whether to adopt these technologies, but how to do so securely. By understanding the risks of knowledge file leakage and implementing comprehensive mitigation strategies, organizations can confidently embrace the future of AI while keeping their most valuable information secure.

As we move forward, the cybersecurity community must continue researching, sharing knowledge, and developing new techniques to address these evolving threats. The stakes are high, but with proper preparation and vigilance, we can ensure that when GPTs spill the tea, it’s intentional and controlled, not accidental and catastrophic.

This analysis is based on the comprehensive research findings from “When GPT Spills the Tea: Comprehensive Assessment of Knowledge File Leakage in GPTs” and current industry practices. As the field of AI security continues to evolve, organizations should stay informed about new developments and adjust their security strategies accordingly.